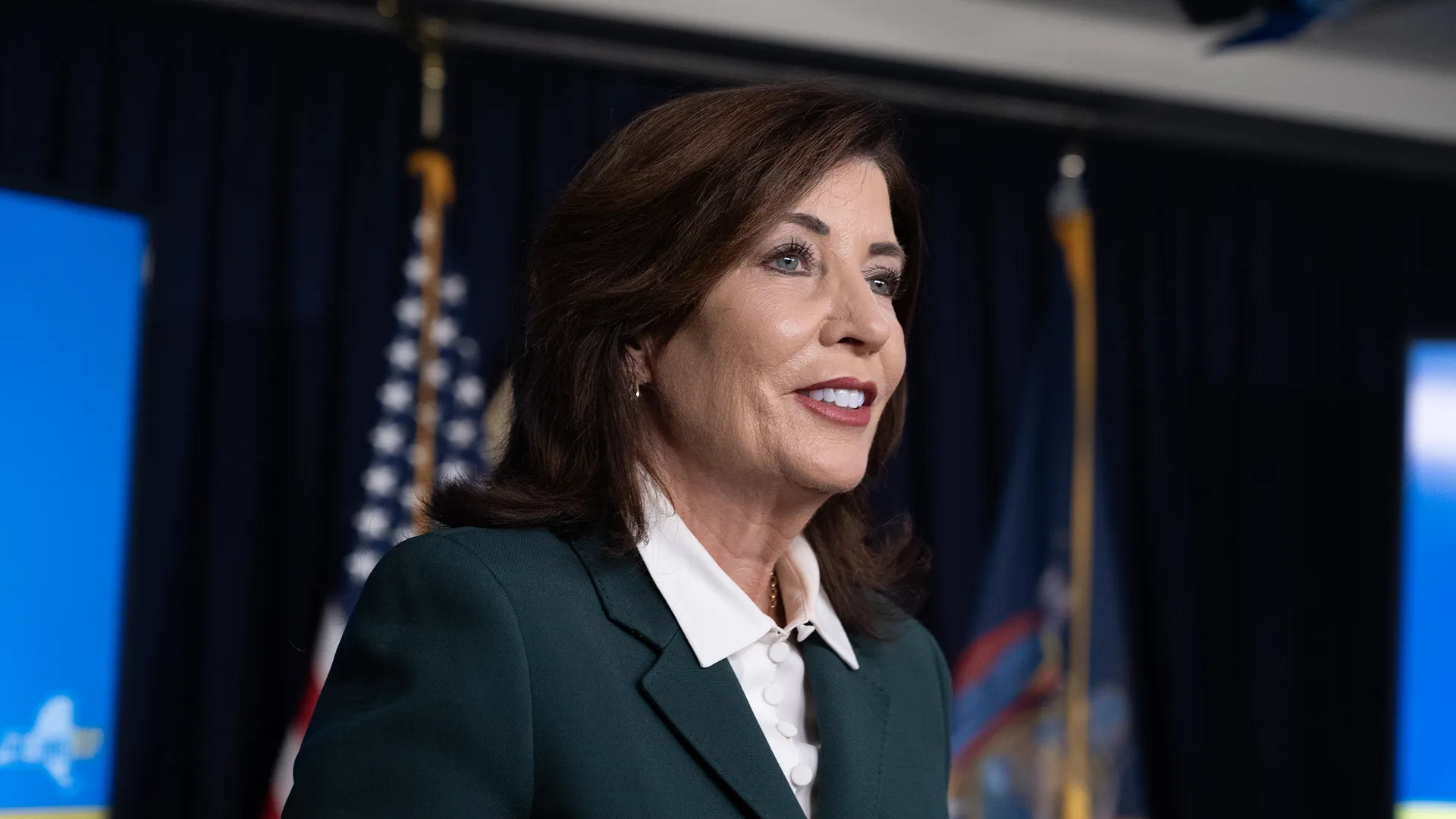

New York Governor Kathy Hochul signed the Responsible AI Safety and Education (RAISE) Act on December 20, 2025, making New York one of the first states to enact comprehensive AI safety legislation. This landmark law establishes strict guidelines for AI development, deployment, and monitoring, potentially setting a template for federal AI regulation.

The RAISE Act: Key Provisions and Requirements

The RAISE Act introduces several groundbreaking requirements for AI systems operating in New York:

- Algorithmic Impact Assessments: Mandatory evaluations for AI systems used in high-risk applications including healthcare, criminal justice, employment, and financial services

- Bias Testing Requirements: Regular auditing for discriminatory outcomes across protected classes

- Transparency Mandates: Public disclosure of AI system capabilities, limitations, and decision-making processes

- Human Oversight Standards: Requirements for meaningful human review in critical AI-driven decisions

- Data Protection Measures: Enhanced privacy protections for data used in AI training and operation

“This legislation positions New York as a leader in responsible AI governance,” said Governor Hochul during the signing ceremony. “We’re ensuring that as AI transforms our society, it does so in a way that protects our citizens and promotes equity.”

Industry Response: Mixed Reactions

The AI industry’s response to the RAISE Act has been divided, reflecting broader tensions between innovation and regulation:

Tech Giants Express Concerns:

Major technology companies have raised concerns about compliance costs and potential innovation barriers. Google, Microsoft, and Meta have all issued statements calling for “balanced regulation that doesn’t stifle technological progress.”

AI Startups Worry About Costs:

Smaller AI companies are particularly concerned about the financial burden of compliance. “These requirements could cost us hundreds of thousands of dollars annually,” said Sarah Kim, CEO of AI healthcare startup MedTech Solutions. “It may force us to relocate operations outside New York.”

Civil Rights Groups Applaud:

Civil rights organizations have praised the legislation as necessary protection against AI bias and discrimination. “This law ensures that AI serves all New Yorkers fairly,” said Marcus Johnson, director of the New York Civil Liberties Union’s Technology and Liberty Project.

Technical Implementation Challenges

The RAISE Act presents several technical challenges for AI developers and operators:

Bias Detection Complexity: Identifying and measuring bias in AI systems requires sophisticated testing methodologies that are still evolving. Companies must develop new frameworks for continuous monitoring across multiple demographic dimensions.
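As a rough illustration of what one such test can look like, the sketch below computes per-group selection rates and an impact ratio (each group's rate divided by the highest group's rate) for several demographic attributes at once. The RAISE Act, as described here, does not name a specific metric; the 0.8 threshold borrows the "four-fifths rule" from US federal employment guidance purely as an assumption, and the record fields (`gender`, `age_band`, `selected`) are hypothetical.

```python
from collections import defaultdict

# Assumed threshold: the "four-fifths rule" from US federal employment
# guidance. The RAISE Act, as summarized here, does not name a metric.
FOUR_FIFTHS = 0.8


def selection_rates(records, attribute):
    """Share of records with a positive outcome, per group of one attribute."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for rec in records:
        group = rec[attribute]
        totals[group] += 1
        if rec["selected"]:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratios(records, attribute):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(records, attribute)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so the ratio is undefined for every group.
        return {g: float("nan") for g in rates}
    return {g: r / best for g, r in rates.items()}


def flag_groups(records, attributes, threshold=FOUR_FIFTHS):
    """List (attribute, group, ratio) for groups whose ratio falls below threshold.

    NaN ratios compare as False and are therefore never flagged.
    """
    flagged = []
    for attr in attributes:
        for group, ratio in impact_ratios(records, attr).items():
            if ratio < threshold:
                flagged.append((attr, group, round(ratio, 3)))
    return flagged


if __name__ == "__main__":
    # Made-up decisions from a hypothetical screening model.
    rows = [("F", "18-39", True), ("F", "40+", False), ("F", "40+", False),
            ("F", "18-39", True), ("M", "18-39", True), ("M", "40+", True),
            ("M", "18-39", True), ("M", "40+", False)]
    decisions = [{"gender": g, "age_band": a, "selected": s} for g, a, s in rows]
    print(flag_groups(decisions, ["gender", "age_band"]))
    # [('gender', 'F', 0.667), ('age_band', '40+', 0.25)]
```

A flagged group is a prompt for investigation, not proof of discrimination; with small samples these ratios can swing widely, which is part of why continuous monitoring is harder than a one-time check.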

Explainability Requirements: The transparency mandates require AI systems to provide understandable explanations for their decisions, which is particularly challenging for complex deep learning models.
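Explanations are easiest for models with a simple structure. The sketch below assumes a hypothetical linear scoring model (the feature names, weights, and baseline applicant are all invented) and reports each feature's contribution relative to the baseline; for a linear model these contributions add up exactly to the difference in score. Deep learning models have no such closed form, so approximate attribution methods such as SHAP or integrated gradients are typically used instead, which is where the requirement becomes harder to satisfy.

```python
# Hypothetical linear credit-scoring model; weights and baseline are made up.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.5, "late_payments": -0.6}
BIAS = 1.0
# Reference applicant, e.g. population averages (assumed values).
BASELINE = {"income_k": 55.0, "debt_ratio": 0.35, "late_payments": 1.0}


def score(applicant):
    """Linear score: bias plus weighted sum of features."""
    return BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())


def explain(applicant, baseline=BASELINE):
    """Per-feature contribution relative to a baseline applicant.

    For a linear model the contributions sum exactly to
    score(applicant) - score(baseline).
    """
    contribs = {f: w * (applicant[f] - baseline[f]) for f, w in WEIGHTS.items()}
    # Largest absolute effects first, which is what a human reviewer needs.
    return sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)


if __name__ == "__main__":
    applicant = {"income_k": 40.0, "debt_ratio": 0.50, "late_payments": 3.0}
    for feature, delta in explain(applicant):
        print(f"{feature:>14}: {delta:+.2f}")
    print(f"score vs. baseline: {score(applicant) - score(BASELINE):+.2f}")
```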

Compliance Monitoring: Companies must establish new processes for documenting AI system performance, maintaining audit trails, and reporting to state regulators.
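One common way to make an audit trail tamper-evident is hash chaining: every entry stores a hash of the previous entry, so altering any past record breaks the chain. The sketch below uses only Python's standard library; the event fields (`model`, `decision_id`, `outcome`, `reviewed_by`) are hypothetical, and nothing in the article says regulators require this particular design.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash.

    Editing or deleting an earlier entry changes its hash and breaks the
    chain, which verify() detects. A tamper-evidence sketch, not a
    replacement for write-once storage.
    """

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "prev_hash": prev_hash,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash a canonical JSON encoding of everything except the hash field.
        payload = {k: v for k, v in body.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(encoded.encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append({"model": "loan-scorer-v3", "decision_id": 101,
                "outcome": "deny", "reviewed_by": "analyst-7"})
    log.append({"model": "loan-scorer-v3", "decision_id": 102, "outcome": "approve"})
    print(log.verify())  # True
    log.entries[0]["event"]["outcome"] = "approve"  # simulate tampering
    print(log.verify())  # False
```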

Economic Impact and Market Dynamics

The RAISE Act is expected to have significant economic implications for New York’s tech sector:

Compliance Costs: Industry estimates suggest that large AI companies may spend $2 million to $5 million annually on RAISE Act compliance, while smaller companies could face costs of $200,000 to $500,000.

Competitive Advantages: Companies that successfully implement robust AI governance may gain competitive advantages in other regulated markets and with enterprise customers prioritizing ethical AI.

Investment Implications: Venture capital firms are reassessing their New York AI investments, with some expressing concern about regulatory overhead while others see opportunities in compliance technology.

Regulatory Framework and Enforcement

The RAISE Act establishes a comprehensive regulatory framework:

New York AI Safety Board: A new state agency will oversee AI regulation, with authority to investigate complaints, conduct audits, and impose penalties.

Penalty Structure: Violations can result in fines ranging from $10,000 to $1 million, depending on the severity and scope of non-compliance.

Implementation Timeline: Companies have 18 months to achieve full compliance, with phased requirements beginning in June 2026.

National and International Implications

New York’s RAISE Act is likely to influence AI regulation beyond state borders:

State-Level Adoption: California, Massachusetts, and Illinois are already considering similar legislation, potentially creating a patchwork of state AI regulations.

Federal Influence: The Act may serve as a model for federal AI legislation, with several congressional committees studying its provisions.

International Alignment: The RAISE Act shares similarities with the EU’s AI Act, potentially facilitating compliance for companies operating in multiple jurisdictions.

Sector-Specific Impacts

Different industries face varying levels of impact from the new regulations:

Healthcare AI: Medical AI companies must demonstrate that their systems don’t exhibit bias across patient demographics, requiring extensive clinical validation studies.

Financial Services: Banks and fintech companies using AI for lending, insurance, or investment decisions face strict fairness requirements and regular auditing.
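Fairness requirements in lending are often checked with outcome-conditioned metrics. One common choice, used here only as an assumption since the article does not say which metric regulators will apply, is the equal opportunity gap: among applicants who actually repaid, how much do approval rates differ between groups? The field names (`group`, `repaid`, `approved`) and the data are hypothetical.

```python
import math


def true_positive_rate(rows, group):
    """Approval rate among applicants in `group` who actually repaid."""
    qualified = [r for r in rows if r["group"] == group and r["repaid"]]
    if not qualified:
        return math.nan  # no repaid applicants in this group: undefined
    return sum(1 for r in qualified if r["approved"]) / len(qualified)


def equal_opportunity_gap(rows):
    """Return per-group true positive rates and the largest gap between them."""
    groups = sorted({r["group"] for r in rows})
    rates = {g: true_positive_rate(rows, g) for g in groups}
    defined = [v for v in rates.values() if not math.isnan(v)]
    gap = max(defined) - min(defined) if defined else math.nan
    return rates, gap


if __name__ == "__main__":
    # Made-up historical loans: (group, repaid, approved_by_model)
    history = [("A", True, True), ("A", True, True), ("A", True, True),
               ("A", True, False), ("A", False, True),
               ("B", True, True), ("B", True, False), ("B", False, False)]
    rows = [{"group": g, "repaid": p, "approved": a} for g, p, a in history]
    rates, gap = equal_opportunity_gap(rows)
    print(rates, gap)  # {'A': 0.75, 'B': 0.5} 0.25
```

In practice repayment is only observed for loans that were granted, so the `repaid` labels for denied applicants would have to come from a separate validation study; this sketch simply assumes they are available.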

Criminal Justice: Law enforcement agencies using predictive policing or risk assessment tools must prove their systems don’t perpetuate racial or socioeconomic bias.

Employment Technology: HR tech companies must ensure their AI-powered hiring and evaluation tools provide equal opportunities across all demographic groups.

Technology Innovation and Adaptation

The RAISE Act is driving innovation in AI governance and compliance technologies:

Bias Detection Tools: New startups are emerging to provide automated bias testing and monitoring services for AI systems.

Explainable AI: Increased demand for interpretable AI models is spurring research into explainable machine learning techniques.

Compliance Platforms: Enterprise software companies are developing comprehensive AI governance platforms to help organizations manage regulatory compliance.

Challenges and Criticisms

Despite broad support for AI safety, the RAISE Act faces several criticisms:

Innovation Concerns: Some argue that strict regulations could slow AI innovation and put New York at a competitive disadvantage compared to less regulated jurisdictions.

Implementation Complexity: Critics question whether current technology can adequately measure and prevent AI bias across all applications.

Enforcement Challenges: Skeptics worry that the new AI Safety Board lacks the technical expertise and resources to effectively monitor compliance.

Looking Forward: The Future of AI Regulation

The RAISE Act represents a significant milestone in AI governance, but its long-term impact will depend on several factors:

Compliance Success: Whether companies can effectively implement the required safeguards without significantly hampering AI performance.

Economic Outcomes: The balance between regulatory costs and benefits, including potential improvements in AI fairness and public trust.

Regulatory Evolution: How the law adapts to rapidly evolving AI technology and emerging use cases.

Industry Adaptation Strategies

AI companies are developing various strategies to comply with the RAISE Act:

Proactive Compliance: Leading companies are implementing AI governance frameworks that exceed current requirements, anticipating future regulations.

Partnership Approaches: Many organizations are partnering with academic institutions and civil rights groups to develop fair AI practices.

Technology Investment: Significant resources are being allocated to developing bias detection, explainability, and monitoring capabilities.

Conclusion: A New Era of AI Accountability

The RAISE Act marks the beginning of a new era in AI regulation, where algorithmic accountability is no longer optional but legally mandated. While the law presents challenges for AI developers and operators, it also creates opportunities for companies that can successfully balance innovation with responsibility.

As other states and the federal government consider similar legislation, New York’s experience with the RAISE Act will provide valuable lessons about the practical realities of AI regulation. The success or failure of this pioneering law will likely influence the future of AI governance across the United States and potentially worldwide.

For the AI industry, the message is clear: the era of self-regulation is ending, and the age of mandatory AI accountability has begun.


TechTrib.com is a leading technology news platform providing comprehensive coverage and analysis of tech news, cybersecurity, artificial intelligence, and emerging technology. Visit techtrib.com.
